AI Inference Explained Simply: What Developers Really Need to Know

Last updated: December 21, 2025

- 06 Dec 2025

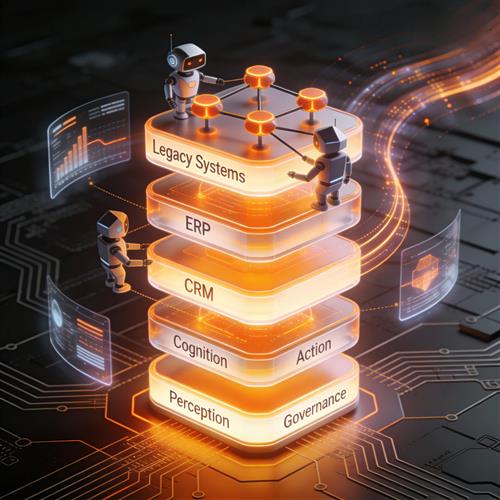

Enterprise Operations 2.0: Why AI Agents Are Replacing Traditional Automation 50/85

Enterprise Operations 2.0: Why AI Agents Are Replacing Traditional Automation 50/85 - 25 Nov 2025

How AI Agents Are Redefining Enterprise Automation and Decision-Making 46/96

How AI Agents Are Redefining Enterprise Automation and Decision-Making 46/96 - 17 Jul 2023

What Is SSL? A Simple Explanation Even a 10-Year-Old Can Understand 43/121

What Is SSL? A Simple Explanation Even a 10-Year-Old Can Understand 43/121 - 06 Nov 2025

Top 10 AI Development Companies in the USA to Watch in 2026 42/91

Top 10 AI Development Companies in the USA to Watch in 2026 42/91 - 05 Jul 2020

What is Sustaining Software Engineering? 39/1302

What is Sustaining Software Engineering? 39/1302 - 01 Jul 2025

The Hidden Costs of Not Adopting AI Agents: Risk of Falling Behind 38/164

The Hidden Costs of Not Adopting AI Agents: Risk of Falling Behind 38/164 - 02 Dec 2025

The Question That Shook Asia: What Happens When We Ask AI to Choose Between a Mother and a Wife? 38/64

The Question That Shook Asia: What Happens When We Ask AI to Choose Between a Mother and a Wife? 38/64 - 28 Nov 2025

How AI Will Transform Vendor Onboarding and Seller Management in 2026 30/82

How AI Will Transform Vendor Onboarding and Seller Management in 2026 30/82 - 01 Mar 2023

What is Unit Testing? Pros and cons of Unit Testing? 29/439

What is Unit Testing? Pros and cons of Unit Testing? 29/439 - 05 Jun 2025

How AI-Driven Computer Vision Is Changing the Face of Retail Analytics 26/135

How AI-Driven Computer Vision Is Changing the Face of Retail Analytics 26/135 - 07 Nov 2025

Online vs. Offline Machine Learning Courses in South Africa: Which One Should You Pick? 25/70

Online vs. Offline Machine Learning Courses in South Africa: Which One Should You Pick? 25/70 - 23 Dec 2024

Garbage In, Megabytes Out (GIMO): How to Rise Above AI Slop and Create Real Signal 24/61

Garbage In, Megabytes Out (GIMO): How to Rise Above AI Slop and Create Real Signal 24/61 - 25 Dec 2025

What Is Algorithmic Fairness? Who Determines the Value of Content: Humans or Algorithms? 23/47

What Is Algorithmic Fairness? Who Determines the Value of Content: Humans or Algorithms? 23/47 - 21 Nov 2025

The Rise of AgentOps: How Enterprises Are Managing and Scaling AI Agents 22/69

The Rise of AgentOps: How Enterprises Are Managing and Scaling AI Agents 22/69 - 20 Mar 2022

What is a Multi-Model Database? Pros and Cons? 21/1164

What is a Multi-Model Database? Pros and Cons? 21/1164 - 24 Dec 2024

Artificial Intelligence and Cybersecurity: Building Trust in EFL Tutoring 20/180

Artificial Intelligence and Cybersecurity: Building Trust in EFL Tutoring 20/180 - 17 Mar 2025

Integrating Salesforce with Yardi: A Guide to Achieving Success in Real Estate Business 19/202

Integrating Salesforce with Yardi: A Guide to Achieving Success in Real Estate Business 19/202 - 09 Jul 2024

What Is Artificial Intelligence and How Is It Used Today? 18/243

What Is Artificial Intelligence and How Is It Used Today? 18/243 - 12 Jan 2026

Companies Developing Custom AI Models for Brand Creative: Market Landscape and Use Cases 18/29

Companies Developing Custom AI Models for Brand Creative: Market Landscape and Use Cases 18/29 - 29 Oct 2024

Top AI Tools and Frameworks You’ll Master in an Artificial Intelligence Course 17/385

Top AI Tools and Frameworks You’ll Master in an Artificial Intelligence Course 17/385 - 03 Jul 2022

What is the difference between Project Proposal and Software Requirements Specification (SRS) in software engineering? 17/1025

What is the difference between Project Proposal and Software Requirements Specification (SRS) in software engineering? 17/1025 - 31 Dec 2021

What is a Data Pipeline? 16/215

What is a Data Pipeline? 16/215 - 10 Apr 2022

What is predictive analytics? Why it matters? 15/192

What is predictive analytics? Why it matters? 15/192 - 17 Oct 2025

MLOps vs AIOps: What’s the Difference and Why It Matters 15/100

MLOps vs AIOps: What’s the Difference and Why It Matters 15/100 - 10 Nov 2025

Multi-Modal AI Agents: Merging Voice, Text, and Vision for Better CX 15/98

Multi-Modal AI Agents: Merging Voice, Text, and Vision for Better CX 15/98 - 25 Apr 2021

What is outstaffing? 14/270

What is outstaffing? 14/270 - 22 Sep 2022

Why is it important to have a “single point of contact (SPoC)” on an IT project? 14/940

Why is it important to have a “single point of contact (SPoC)” on an IT project? 14/940 - 30 Jan 2022

What Does a Sustaining Engineer Do? 14/617

What Does a Sustaining Engineer Do? 14/617 - 13 Nov 2021

What Is Bleeding Edge Technology? Are bleeding edge technologies cheaper? 13/539

What Is Bleeding Edge Technology? Are bleeding edge technologies cheaper? 13/539 - 24 Oct 2025

AI Agents in SaaS Platforms: Automating User Support and Onboarding 12/77

AI Agents in SaaS Platforms: Automating User Support and Onboarding 12/77 - 06 May 2025

How Machine Learning Is Transforming Data Analytics Workflows 10/187

How Machine Learning Is Transforming Data Analytics Workflows 10/187 - 22 Sep 2025

Why AI Is Critical for Accelerating Drug Discovery in Pharma 8/83

Why AI Is Critical for Accelerating Drug Discovery in Pharma 8/83 - 21 Aug 2024

What is Singularity and Its Impact on Businesses? 8/403

What is Singularity and Its Impact on Businesses? 8/403 - 04 Oct 2023

The Future of Work: Harnessing AI Solutions for Business Growth 7/275

The Future of Work: Harnessing AI Solutions for Business Growth 7/275 - 15 Apr 2024

Weights & Biases: The AI Developer Platform 7/189

Weights & Biases: The AI Developer Platform 7/189 - 21 Apr 2025

Agent AI in Multimodal Interaction: Transforming Human-Computer Engagement 7/188

Agent AI in Multimodal Interaction: Transforming Human-Computer Engagement 7/188 - 27 Aug 2025

How AI Consulting Is Driving Smarter Diagnostics and Hospital Operations 7/101

How AI Consulting Is Driving Smarter Diagnostics and Hospital Operations 7/101 - 29 Aug 2025

How AI Is Transforming Modern Management Science 5/46

How AI Is Transforming Modern Management Science 5/46 - 05 Aug 2024

Affordable Tech: How Chatbots Enhance Value in Healthcare Software 2/169

Affordable Tech: How Chatbots Enhance Value in Healthcare Software 2/169

If your Large Language Model (LLM) feature feels slow, expensive, or unpredictable, chances are the problem isn’t the model itself but inference. Understanding AI inference is one of the fastest ways for developers to ship better products, reduce costs, and hit performance targets.

This article breaks down AI inference in simple terms and explains when it actually matters, especially if you’re building real-world applications with LLMs.

What Is AI Inference?

AI inference is the moment when a model turns your input into an output.

You send text, numbers, or tokens into a model. The model processes them and returns tokens as a response. Most of what users experience (latency, cost, and reliability) is determined during this step.

In a managed model world (such as GPT or Claude hosted on external platforms), inference feels invisible. You send a prompt, get a response, and move on. The complexity is hidden behind a polished API and massive infrastructure.

For many teams, this is perfectly fine, and often the fastest path to value.

When Should Developers Care About Inference?

Inference starts to matter the moment model speed or cost becomes visible in your product.

You’ll need to think seriously about inference when:

- Your feature has strict latency requirements (autocomplete, chat, real-time UX)

- Costs grow rapidly with usage and need to scale predictably

- Data cannot leave a specific region or cloud environment

- Legal or compliance rules prevent calling external APIs

- You need offline, edge, or on-device AI

- You want custom behavior beyond what general-purpose models offer

In these cases, inference is no longer someone else’s problem: you own it.

Managed Models vs. Models You Control

With managed models:

- You focus on prompts, tools, and product logic

- Infrastructure, scaling, and optimization are handled for you

- Latency and cost are largely set by the provider; your main levers are prompt length and which model you call

With self-hosted or open-source models:

- You still send prompts and receive tokens

- But now speed, cost, and reliability are tunable

- Inference becomes a first-class engineering concern

This is where understanding inference pays off.

The Two Phases of Model Inference

Think of inference as having two distinct phases:

1. Prefill (Reading the Input)

- The model reads your prompt and context

- This phase is compute-heavy, and it fills the attention (KV) cache that decode reads from later

- Long prompts slow everything down before the first token appears

2. Decode (Generating the Output)

- The model produces tokens one by one

- Latency here is felt as “typing speed”

- Small delays stack up and become noticeable

Knowing which phase is slow tells you exactly where to optimize.
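To tell the two phases apart in practice, you can time a streamed response: the wait before the first token approximates prefill (plus network and queueing), and the pace of the remaining tokens approximates decode. The sketch below is a minimal illustration, not a client for any particular API. `fake_token_stream` is a hypothetical stand-in that simulates a model with `time.sleep`; in a real app you would pass your own streaming iterator to `measure_stream`.

```python
import time
from typing import Iterable, Iterator


def fake_token_stream(prefill_s: float, per_token_s: float, n_tokens: int) -> Iterator[str]:
    """Hypothetical stand-in for a streaming model client (assumption, not a real API)."""
    time.sleep(prefill_s)  # simulated prefill: nothing is emitted yet
    for i in range(n_tokens):
        if i > 0:
            time.sleep(per_token_s)  # simulated decode step between tokens
        yield f"tok{i}"


def measure_stream(stream: Iterable[str]) -> dict:
    """Split a streamed response into time-to-first-token and decode rate."""
    start = time.perf_counter()
    first = None
    count = 0
    for _ in stream:
        if first is None:
            first = time.perf_counter()  # first token arrived
        count += 1
    end = time.perf_counter()

    if first is None:
        return {"ttft_s": None, "decode_tokens_per_s": None, "tokens": 0}

    decode_s = end - first
    # Rate covers only the tokens generated after the first one.
    rate = (count - 1) / decode_s if count > 1 and decode_s > 0 else None
    return {"ttft_s": first - start, "decode_tokens_per_s": rate, "tokens": count}


if __name__ == "__main__":
    print(measure_stream(fake_token_stream(0.05, 0.01, 5)))
```

A high `ttft_s` points at prefill or placement; a low `decode_tokens_per_s` points at decode.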

What You Can Control in AI Inference

As a developer, you have control over several key levers:

1. Model Choice

- Use instruction-tuned models to reduce prompt length

- Choose the smallest model that meets your quality bar

- Consider distilled models that retain quality with less compute

- Mixture-of-Experts models activate only a subset of their parameters for each token

Smaller, well-tuned models often feel faster and cheaper than massive ones.

2. Prompt Design

- Every token you send must be processed

- Long prompts slow prefill immediately

- Be direct, precise, and minimal

- Include only relevant context

- Ask for specific formats

This is the cheapest optimization you’ll ever make, and it works everywhere.
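One way to make "include only relevant context" concrete is to give each prompt a token budget and keep only the best-scoring context that fits. The sketch below is illustrative: `rough_token_count` is a crude word-count proxy (real tokenizers count differently), and the relevance scores are assumed to come from whatever retrieval step you already have.

```python
def rough_token_count(text: str) -> int:
    """Crude proxy: whitespace-separated words. Real tokenizers will differ."""
    return len(text.split())


def build_prompt(instruction: str, snippets: list[tuple[float, str]], budget: int) -> str:
    """Keep the highest-scoring context snippets that fit within the token budget.

    `snippets` is a list of (relevance_score, text) pairs; the scoring method
    is an assumption and is not defined here.
    """
    used = rough_token_count(instruction)
    kept = []
    for _score, text in sorted(snippets, key=lambda s: s[0], reverse=True):
        cost = rough_token_count(text)
        if used + cost <= budget:  # skip anything that would exceed the budget
            kept.append(text)
            used += cost
    # Context first, instruction last.
    return "\n\n".join(kept + [instruction])


if __name__ == "__main__":
    context = [(0.9, "Refunds take 5 days."), (0.2, "Our office dog is named Bo.")]
    print(build_prompt("How long do refunds take?", context, budget=10))
```

Swapping in your model's real tokenizer for `rough_token_count` makes the budget exact.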

3. Hardware and Placement

- GPUs are great for steady, heavy workloads

- CPUs work well for small models and bursty traffic

- Keep models close to your app and data

- For instant feedback, run small models at the edge

- Let larger models refine results in the background

Reducing network distance often matters more than raw compute.

4. Serving and Batching

- Efficient servers keep hardware busy

- Group requests when possible (batching)

- Keep batches small for interactive apps

- Use larger batches for offline or background jobs

Think of batching like carpooling: fewer empty seats, better efficiency.
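The core idea of batching can be sketched in a few lines: instead of calling the model once per request, group requests and call it once per group. This is a simplified, static version; production servers typically batch dynamically and also cap how long a request may wait. `model_fn` is a hypothetical callable that takes a list of prompts and returns one output per prompt.

```python
from typing import Callable, List, Sequence


def run_in_batches(
    prompts: Sequence[str],
    max_batch: int,
    model_fn: Callable[[List[str]], List[str]],
) -> List[str]:
    """Call model_fn once per group of up to max_batch prompts, preserving order."""
    if max_batch < 1:
        raise ValueError("max_batch must be at least 1")
    results: List[str] = []
    for i in range(0, len(prompts), max_batch):
        batch = list(prompts[i:i + max_batch])
        outputs = model_fn(batch)  # one model call serves the whole batch
        if len(outputs) != len(batch):
            raise ValueError("model_fn must return one output per prompt")
        results.extend(outputs)
    return results


if __name__ == "__main__":
    def echo_model(batch: List[str]) -> List[str]:
        # Stand-in for a real model call.
        return [p.upper() for p in batch]

    # Small batches suit interactive use; larger ones suit background jobs.
    print(run_in_batches(["a", "b", "c", "d", "e"], max_batch=2, model_fn=echo_model))
```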

5. Caching and Memory Reuse

- Reuse tokens already processed in previous turns

- Avoid re-reading context the model has already seen

- Cache identical inputs and retrieval results

- Page the attention (KV) cache for long contexts so it does not need one large contiguous block of memory

Caching removes work you’ve already paid for.
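The simplest of these, caching identical inputs, can be sketched as a lookup keyed on everything that affects the output. This is a minimal in-memory illustration: `generate` is a hypothetical function you supply, and the approach only makes sense when repeating the same answer is acceptable (for example, deterministic sampling settings).

```python
import hashlib
import json
from typing import Callable, Dict


class ResponseCache:
    """In-memory cache for identical (model, prompt, params) requests."""

    def __init__(self, generate: Callable[..., str]) -> None:
        self._generate = generate  # hypothetical model-calling function
        self._store: Dict[str, str] = {}
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(model: str, prompt: str, params: dict) -> str:
        # Hash every input that can change the output.
        payload = json.dumps(
            {"model": model, "prompt": prompt, "params": params}, sort_keys=True
        )
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def get(self, model: str, prompt: str, **params) -> str:
        key = self._key(model, prompt, params)
        if key in self._store:
            self.hits += 1
            return self._store[key]
        self.misses += 1
        output = self._generate(model, prompt, **params)
        self._store[key] = output
        return output


if __name__ == "__main__":
    cache = ResponseCache(lambda model, prompt, **p: f"[{model}] {prompt[::-1]}")
    cache.get("small-model", "hello", temperature=0)
    cache.get("small-model", "hello", temperature=0)  # served from cache
    print(cache.hits, cache.misses)
```

Reusing already-processed tokens across turns (prefix or KV caching) happens inside the serving layer and is not shown here.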

6. Quantization

- Compress model weights to use fewer bits

- 8-bit or even 4-bit weights reduce memory and improve speed

- Quality often stays close to the original for real-world tasks, but verify on your own evaluation set

- If quality drops, roll back one step

This is one of the most powerful inference optimizations available.
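Two small calculations show why this helps. The first estimates weight memory from parameter count and bit width (weights only; the attention cache and activations add more). The second is a toy symmetric 8-bit round trip that shows where the small quality loss comes from. Both are illustrative sketches; real quantization libraries use per-channel or per-group scales and more careful schemes.

```python
from typing import List, Tuple


def weight_memory_gb(n_params: float, bits: int) -> float:
    """Approximate memory for model weights alone, in gigabytes (10^9 bytes)."""
    return n_params * bits / 8 / 1e9


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Toy symmetric quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from the integers and the scale."""
    return [v * scale for v in q]


if __name__ == "__main__":
    # A hypothetical 7-billion-parameter model at different precisions.
    print(weight_memory_gb(7e9, 16))  # 14.0
    print(weight_memory_gb(7e9, 8))   # 7.0
    print(weight_memory_gb(7e9, 4))   # 3.5

    original = [0.5, -1.0, 0.25, 0.0]
    q, scale = quantize_int8(original)
    restored = dequantize(q, scale)
    # Each restored weight is within half a quantization step of the original.
    print(max(abs(a - b) for a, b in zip(original, restored)) <= scale / 2 + 1e-12)
```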

Measuring What Actually Matters

To optimize inference, you need the right signals:

- Separate prefill time from decode time

- Track tokens per second, memory usage, and device utilization

- Watch tail latency, not just averages

- Set token budgets per feature or tenant to control costs

What you measure determines what you can improve.
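As a rough illustration of two of these signals, the sketch below computes a nearest-rank percentile (to show how an average can hide tail latency) and enforces a per-tenant token budget. The class and function names are hypothetical, and the budget here is a simple in-memory counter with no time window or persistence.

```python
import math
from typing import Dict, List


def percentile(values: List[float], p: float) -> float:
    """Nearest-rank percentile for 0 < p <= 100."""
    if not values:
        raise ValueError("values must not be empty")
    ordered = sorted(values)
    rank = max(1, math.ceil(p * len(ordered) / 100))
    return ordered[rank - 1]


class TokenBudget:
    """Tracks token spend per tenant against a fixed limit (illustrative only)."""

    def __init__(self, limit_per_tenant: int) -> None:
        self.limit = limit_per_tenant
        self.used: Dict[str, int] = {}

    def try_spend(self, tenant: str, tokens: int) -> bool:
        """Record the spend and return True, or return False if it would exceed the limit."""
        current = self.used.get(tenant, 0)
        if current + tokens > self.limit:
            return False
        self.used[tenant] = current + tokens
        return True


if __name__ == "__main__":
    # 98 fast requests and 2 slow ones, in milliseconds.
    latencies_ms = [120.0] * 98 + [900.0, 2500.0]
    print(sum(latencies_ms) / len(latencies_ms))  # 151.6
    print(percentile(latencies_ms, 50))           # 120.0
    print(percentile(latencies_ms, 99))           # 900.0

    budget = TokenBudget(limit_per_tenant=1000)
    print(budget.try_spend("tenant-a", 800))  # True
    print(budget.try_spend("tenant-a", 300))  # False
```

In this made-up sample the mean looks healthy while the 99th percentile is several times worse, which is exactly what averages hide.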

A Simple Mental Model for Debugging Inference

Use this checklist:

- Slow before first token?

→ Prompt too long, model too big, or too far away

- Slow after tokens start streaming?

→ Decode is slow; stream tokens, increase utilization, or use draft-and-verify

- Running out of memory?

→ Reduce context, quantize weights, or page attention

- High tail latency?

→ Avoid cold starts, keep warm pools, pin workloads to regions

This framework works across almost any stack.
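If you already collect these signals, the checklist can be encoded as a small triage helper. The sketch below is only an illustration: the function name and the default thresholds (`ttft_target_s`, `rate_target`, `tail_ratio`) are placeholder assumptions, and you would replace them with targets from your own product.

```python
from typing import List


def diagnose(
    ttft_s: float,
    tokens_per_s: float,
    out_of_memory: bool,
    p50_s: float,
    p99_s: float,
    ttft_target_s: float = 1.0,   # placeholder threshold
    rate_target: float = 20.0,    # placeholder threshold
    tail_ratio: float = 3.0,      # placeholder threshold
) -> List[str]:
    """Map basic inference measurements to the checklist's suggestions."""
    hints: List[str] = []
    if ttft_s > ttft_target_s:
        hints.append("prefill: shorten the prompt, use a smaller model, or move it closer")
    if tokens_per_s < rate_target:
        hints.append("decode: stream tokens, increase utilization, or use draft-and-verify")
    if out_of_memory:
        hints.append("memory: reduce context, quantize weights, or page attention")
    if p50_s > 0 and p99_s / p50_s > tail_ratio:
        hints.append("tail: avoid cold starts, keep warm pools, pin workloads to regions")
    return hints


if __name__ == "__main__":
    for hint in diagnose(ttft_s=2.4, tokens_per_s=45.0, out_of_memory=False,
                         p50_s=0.9, p99_s=4.0):
        print(hint)
```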

Final Thoughts

AI inference is not an abstract concept; it’s where user experience, performance, and cost converge.

If you’re happy with managed APIs, that’s a perfectly valid choice. But when speed, scale, or control start to matter, understanding inference becomes a competitive advantage.

Once you grasp how inference works, you stop guessing and start engineering AI systems that are fast, efficient, and predictable.
