Agent AI in Multimodal Interaction: Transforming Human-Computer Engagement

Last updated: May 18, 2025


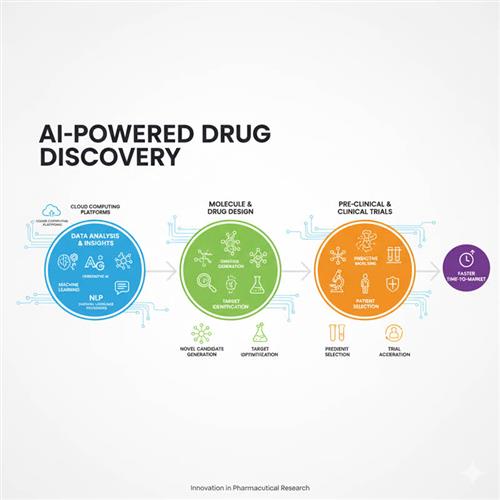


About the Author: Anand Subramanian, technology expert and AI enthusiast

Anand Subramanian is a technology expert and AI enthusiast currently leading the marketing function at Intellectyx, a Data, Digital, and AI solutions provider with over a decade of experience working with enterprises and government departments.

Multimodal human-computer interaction is already available in today's technology. Agent AI combines advanced language models with sensory technology so that machines can communicate effectively with people, narrowing the gap between the two. These agents go beyond voice and text: they can also process visual input and understand context, which lets them hold smooth interactions with natural responses.

What this means for the budgets and purchasing decisions of businesses and consumers is still unclear. What is clear is that multimodal AI can process images, speech, and text together, and that autonomous agents can learn to anticipate user needs and deliver highly personalized interactions.

The Rise of Multimodal Agent AI

Conventional AI systems worked in a single mode: chatbots processed text, and voice assistants handled speech without recognizing visual cues. Today’s Agent AI systems combine:

- Natural Language Processing (NLP): understanding and generating human language

- Computer Vision: interpreting images, videos, and real-world environments

- Speech Recognition & Synthesis: enabling fluid voice conversations

- Sensor Fusion: integrating data from multiple inputs for smarter decision-making

Combining these technologies makes AI agents more capable. For example, an agent can examine a photo and then discuss what it shows during a conversation.
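To make the idea of combining modalities concrete, here is a minimal Python sketch of "late fusion", where each modality is processed separately and the results are merged into one context. It is an illustration only: the `Observation` class, the `fuse` and `respond` functions, and the toy reply policy are hypothetical names invented for this example, and the speech transcript and image labels are assumed to come from separate recognition models that are not shown.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Observation:
    """One turn of user input; any modality may be missing (hypothetical type)."""
    text: Optional[str] = None          # typed message
    transcript: Optional[str] = None    # assumed output of a speech recognizer
    image_labels: tuple[str, ...] = ()  # assumed output of a vision model


def fuse(obs: Observation) -> dict:
    """Merge per-modality results into one context the agent can reason over."""
    utterance = " ".join(part for part in (obs.text, obs.transcript) if part)
    present = (
        ("text", obs.text),
        ("speech", obs.transcript),
        ("vision", obs.image_labels),
    )
    return {
        "utterance": utterance,
        "visible_objects": sorted(set(obs.image_labels)),
        "modalities": [name for name, value in present if value],
    }


def respond(context: dict) -> str:
    """Toy policy: describe what was seen when the user asks about a photo."""
    if context["visible_objects"] and "photo" in context["utterance"].lower():
        return "I can see: " + ", ".join(context["visible_objects"]) + "."
    return "Could you share a photo or more detail?"


ctx = fuse(Observation(transcript="What is in this photo?",
                       image_labels=("dog", "beach", "dog")))
print(respond(ctx))  # I can see: beach, dog.
```

A production agent would replace the toy policy with a language model, but the shape is the same: normalize each input, merge, then decide.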

Key Applications of Multimodal Agent AI

Multimodal Agent AI is revolutionizing industries by enabling smarter, more natural human-computer interactions. It allows systems to process various forms of data, such as text, speech, images, and video, simultaneously, creating more efficient and personalized experiences. This is particularly beneficial in sectors like healthcare, retail, education, and customer support. AI agent use cases show how businesses across industries can use this technology to transform customer engagement and operational efficiency.

1. Smarter Virtual Assistants

- Multimodal AI has transformed virtual assistants, which can now combine text and speech with image analysis, document analysis, and contextual understanding at the same time.

- In healthcare, AI assistants that review medical images alongside doctors during patient examinations can help produce faster, better results.

- In retail, virtual shopping assistants can suggest similar items when a user uploads a picture, making the shopping journey simple and personalized.

These assistants now act as proactive collaborators rather than purely reactive tools. Businesses looking to enhance user experience through intelligent assistants should hire AI developers skilled in integrating vision, voice, and NLP into unified systems.

2. Autonomous Customer Support

AI agents are evolving beyond scripted responses. Modern multimodal AI systems can:

- Interpret documents like invoices, screenshots, or error logs submitted during a support chat to provide faster, more accurate resolutions.

- Analyze voice tone to detect frustration or confusion and adjust their responses accordingly for better user satisfaction.

- Guide users visually, for example, by using augmented reality (AR) overlays that help customers troubleshoot hardware problems step-by-step.

This makes customer support not only more responsive but deeply personalized and empathetic.
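As a rough illustration of how a support agent might adjust to a customer's tone, the Python sketch below picks an action and a tone from a frustration score and an optional attachment. Everything here is an assumption made for the example: the `SupportTurn` type, the `plan_reply` function, the 0.5 and 0.8 thresholds, and the score itself, which would come from a voice-tone or sentiment model that is not shown.

```python
from dataclasses import dataclass

# Assumed cutoffs for illustration; real values would need tuning.
EMPATHIZE_AT = 0.5
ESCALATE_AT = 0.8


@dataclass
class SupportTurn:
    """One customer message plus signals from other modalities (hypothetical type)."""
    message: str
    frustration: float         # 0.0 (calm) to 1.0 (very frustrated), from an assumed tone model
    attachment_kind: str = ""  # e.g. "screenshot", "invoice", "error_log"; empty if none


def plan_reply(turn: SupportTurn) -> dict:
    """Choose the next action and tone from the combined signals."""
    if not 0.0 <= turn.frustration <= 1.0:
        raise ValueError("frustration must be between 0.0 and 1.0")
    if turn.frustration >= ESCALATE_AT:
        # Very frustrated customers are routed to a person.
        return {"action": "handoff_to_human", "tone": "empathetic"}
    tone = "empathetic" if turn.frustration >= EMPATHIZE_AT else "neutral"
    action = "analyze_attachment" if turn.attachment_kind else "ask_for_details"
    return {"action": action, "tone": tone}


plan = plan_reply(SupportTurn("The app keeps crashing", 0.6, "screenshot"))
print(plan)  # {'action': 'analyze_attachment', 'tone': 'empathetic'}
```

Keeping the decision in one small function makes the escalation rule easy to audit and adjust as the tone model improves.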

3. Enhanced Human-Robot Collaboration

Closer collaboration between AI-powered robots and human workers is improving production across manufacturing, logistics, and agriculture. These robots can:

- Accept voice commands, so workers can communicate with them easily during factory operations.

- Use real-time vision to identify surrounding objects and people.

- Adapt dynamically to complex, unstructured environments and move through them without human supervision.

Combining multiple modalities lets machines work safely and efficiently alongside humans.
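One way to picture voice and vision working together on a robot is a safety gate: a spoken command is carried out only if the camera reports no person too close. The Python sketch below is a simplified illustration under stated assumptions. The `Detection` type, the `decide` function, the command vocabulary, and the 1.5 m distance are all hypothetical, and the speech recognizer and object detector that would produce these inputs are not shown.

```python
from dataclasses import dataclass

SAFE_DISTANCE_M = 1.5                      # assumed minimum clearance to a person
KNOWN_COMMANDS = {"move", "pick", "place"}  # assumed command vocabulary


@dataclass(frozen=True)
class Detection:
    """One object reported by an assumed vision model."""
    label: str
    distance_m: float


def decide(command: str, detections: list[Detection]) -> str:
    """Combine a recognized voice command with vision detections."""
    cmd = command.strip().lower()
    if cmd == "stop":
        return "stop"  # a stop request always wins
    person_close = any(
        d.label == "person" and d.distance_m < SAFE_DISTANCE_M for d in detections
    )
    if person_close:
        return "pause"  # vision overrides the voice command for safety
    return cmd if cmd in KNOWN_COMMANDS else "ignore"


scene = [Detection("pallet", 0.8), Detection("person", 1.0)]
print(decide("Move", scene))                      # pause
print(decide("Move", [Detection("pallet", 0.8)]))  # move
```

The ordering of the checks is the design choice that matters here: an explicit stop comes first, then the vision-based safety check, and only then the requested action.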

4. Immersive Education & Training

Education is being transformed by multimodal AI tutors that create rich, interactive learning experiences. These systems can:

- Explain complex topics verbally, adjusting the language and tone to the learner’s level.

- Provide visual simulations, bringing abstract concepts to life through animations or AR/VR.

- Deliver immediate feedback on written assignments or spoken language, making learning highly adaptive and effective.

Such tools are ideal for personalized education, corporate training, and lifelong learning programs.

Why Multimodal Agent AI Is a Game-Changer

- More Natural Interactions: Multimodal agents engage with users in a human-like way by combining text, voice, gestures, and visuals.

- Higher Efficiency: By understanding context across different inputs, these agents can reduce repetitive questions and streamline processes.

- Context-Aware Responses: They can tailor replies by analyzing emotions, body language, documents, or screen visuals, leading to smarter decisions.

- Broader Accessibility: Individuals with disabilities benefit greatly from voice-to-text, image-based inputs, and haptic feedback capabilities.

The Future: Where Is This Technology Heading?

- Emotionally aware AI systems will read body signals, vocal characteristics, and facial expressions to deliver more human-like interactions in education, mental health support, and customer care.

- Future AI agents will offer real-time multilingual translation, automatically converting meeting dialogue and written communication between languages during collaborative work.

- AI “Digital Twins” are virtual models that replicate a person's behavior and act autonomously on their behalf in metaverse platforms and enterprise systems.

Final Thoughts

Multimodal Agent AI isn’t just an upgrade—it’s a fundamental shift in human-computer interaction. As these systems become more sophisticated, businesses that adopt them early will gain a competitive edge in user experience, automation, and innovation. Partnering with an experienced AI Agent development company can help you unlock these transformative capabilities faster, whether you're building intelligent customer support systems, advanced virtual assistants, or immersive AI-powered training platforms.

