Static and dynamic code analysis: What is the difference?

Last updated: January 17, 2024

What is static analysis?

Static analysis is the process of analyzing code without executing it. It can detect syntax errors, coding standards violations, potential bugs, security flaws, and other quality issues. Static analysis tools can scan the entire code base or specific files, and generate reports or feedback on the code quality. Some examples of static analysis tools are SonarQube, PMD, and ESLint.
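
To make "analyzing code without executing it" concrete, here is a minimal sketch of a static check built on Python's standard `ast` module. It is not how SonarQube, PMD, or ESLint work internally; the `find_eval_calls` function and the `SOURCE` snippet are hypothetical names invented for this illustration. The check parses the source text into a syntax tree and reports direct calls to `eval`, and the analyzed code is never run.

```python
import ast

# Hypothetical code under analysis, held as plain text. It is parsed, never executed.
SOURCE = '''
def load(expr):
    return eval(expr)  # risky: evaluates arbitrary input
'''


def find_eval_calls(source: str) -> list[int]:
    """Return the line numbers of direct eval(...) calls found in the source text."""
    tree = ast.parse(source)
    lines = []
    for node in ast.walk(tree):
        # A direct call looks like Call(func=Name(id="eval"), ...)
        if (
            isinstance(node, ast.Call)
            and isinstance(node.func, ast.Name)
            and node.func.id == "eval"
        ):
            lines.append(node.lineno)
    return sorted(lines)


print(find_eval_calls(SOURCE))
```

A real tool applies hundreds of such rules and also tracks data flow, but the principle is the same: the findings come from the structure of the code, not from running it.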

What is dynamic analysis?

Dynamic analysis is the process of analyzing code while it is running. It can measure the performance, behavior, and functionality of the code, and identify runtime errors, memory leaks, resource consumption, and other issues that may affect the user experience. Dynamic analysis tools can monitor the code execution, simulate user inputs, or generate test cases, and provide insights or suggestions on how to improve the code. Some examples of dynamic analysis tools are JMeter, Valgrind, and Selenium.
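
As a rough illustration of observing code while it runs, the sketch below uses Python's standard `tracemalloc` module to measure how much memory is still allocated after a function has been called many times. The `leaky_handler` function and its `_cache` list are hypothetical stand-ins for application code; dedicated tools such as Valgrind work at a much lower level, so treat this only as a demonstration of the idea.

```python
import tracemalloc

_cache = []  # hypothetical module-level cache that is never cleared


def leaky_handler(payload: bytes) -> int:
    """Simulated request handler that keeps a reference to every payload."""
    _cache.append(payload)
    return len(payload)


def measure_growth(func, calls: int = 1000) -> int:
    """Call func repeatedly and return the net bytes still allocated afterwards."""
    tracemalloc.start()
    before, _peak = tracemalloc.get_traced_memory()
    for _ in range(calls):
        func(bytes(1024))  # a fresh 1 KiB payload per call
    after, _peak = tracemalloc.get_traced_memory()
    tracemalloc.stop()
    return after - before


print(measure_growth(leaky_handler))
```

The leak only shows up because the handler was actually executed with real payloads. A function that does not retain its arguments would report growth close to zero under the same measurement.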

Static code analysis is a form of white-box testing: it inspects the source code itself and can help identify security issues there. Dynamic code analysis, on the other hand, is often used as a form of black-box vulnerability scanning, in which software teams scan a running application and identify vulnerabilities from its observable behavior.

How to compare accuracy?

Accuracy is the ability of an analysis tool to correctly identify and report the issues in the code. It depends on factors such as the scope, depth, and coverage of the analysis, the rules and algorithms used by the tool, and the false positives and false negatives the tool generates. To compare accuracy between different tools, you can use criteria such as precision (ratio of true positives to all issues the tool reports), recall (ratio of true positives to all issues actually present in the code), F1-score (harmonic mean of precision and recall), severity (level of impact or risk of the issues reported), and confidence (level of certainty or reliability of each report).
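
The first three criteria can be computed directly once you have a set of issues known to exist in a benchmark code base. The sketch below assumes each issue has a unique identifier; the function name `accuracy_metrics` and the example IDs are made up for illustration.

```python
def accuracy_metrics(reported: set[str], actual: set[str]) -> dict[str, float]:
    """Compare the issues a tool reported against the issues known to exist."""
    true_pos = len(reported & actual)   # reported and real
    false_pos = len(reported - actual)  # reported but not real
    false_neg = len(actual - reported)  # real but missed

    precision = true_pos / (true_pos + false_pos) if reported else 0.0
    recall = true_pos / (true_pos + false_neg) if actual else 0.0
    f1 = (
        2 * precision * recall / (precision + recall)
        if precision + recall
        else 0.0
    )
    return {"precision": precision, "recall": recall, "f1": f1}


# Hypothetical benchmark: the tool reports four issues, three issues really exist.
tool_report = {"ISSUE-A", "ISSUE-B", "ISSUE-C", "ISSUE-D"}
known_issues = {"ISSUE-A", "ISSUE-B", "ISSUE-E"}
print(accuracy_metrics(tool_report, known_issues))
```

In this example two of the four reports are real (precision 0.5) and two of the three known issues were found (recall of about 0.67), so the tool is both noisy and incomplete. The F1-score summarizes both weaknesses in a single number.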

How to compare performance?

Performance is the ability of an analysis tool to efficiently and effectively analyze code, which depends on several factors such as speed, scalability, resource consumption, code complexity and size, and integration with other tools or platforms. To compare the performance of different tools, you can use metrics such as time (duration or frequency of the analysis), throughput (amount or rate of code analyzed per unit of time), overhead (additional resources required or consumed by the tool), compatibility (degree of support for different languages, frameworks, or environments), and usability (ease of use for developers or reviewers).
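
Time and throughput are the easiest of these metrics to measure yourself. The following sketch times a toy analyzer with `time.perf_counter` and derives lines analyzed per second. The `count_todo_comments` analyzer and the `benchmark` helper are hypothetical; in practice you would time the real tool on a representative code base.

```python
import time


def count_todo_comments(source: str) -> int:
    """Toy stand-in for an analyzer: counts lines that contain 'TODO'."""
    return sum(1 for line in source.splitlines() if "TODO" in line)


def benchmark(analyzer, source: str, repeats: int = 5) -> dict[str, float]:
    """Return average seconds per run and lines analyzed per second."""
    line_count = len(source.splitlines())
    start = time.perf_counter()
    for _ in range(repeats):
        analyzer(source)
    per_run = (time.perf_counter() - start) / repeats
    throughput = line_count / per_run if per_run > 0 else float("inf")
    return {"seconds_per_run": per_run, "lines_per_second": throughput}


# Synthetic input: 20,000 lines, half of them carrying a TODO comment.
SAMPLE = "x = 1  # TODO: rename\ny = 2\n" * 10_000
print(benchmark(count_todo_comments, SAMPLE))
```

The absolute numbers vary from machine to machine, so they are only meaningful when different tools are compared on the same hardware and the same input.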

Advantages and disadvantages of static and dynamic analysis

Static and dynamic analysis both have their advantages and disadvantages, and they complement each other by evaluating code quality in different ways. Static analysis can analyze the code before execution, which saves time and resources, and it can detect issues that may not be visible during runtime, such as dead code or unreachable branches. However, static analysis may generate false positives or false negatives, and it may require manual configuration of rules or standards. Dynamic analysis can analyze the code in real or simulated conditions, measure performance or functionality, and identify issues that only occur during runtime. On the other hand, dynamic analysis cannot examine code that is never executed, so its results depend on the inputs and the environment used for the run.
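
The dependence of dynamic analysis on inputs can be shown with a small sketch. The `average` function and the `failing_inputs` helper are hypothetical examples, not part of any tool named in this article.

```python
def average(values: list[float]) -> float:
    """Hypothetical function under test; it fails on an empty list."""
    return sum(values) / len(values)


def failing_inputs(func, inputs):
    """Run func on every input and collect (input, exception name) for each failure."""
    failures = []
    for value in inputs:
        try:
            func(value)
        except Exception as exc:
            failures.append((value, type(exc).__name__))
    return failures


# Run 1: the chosen inputs never trigger the defect, so nothing is reported.
print(failing_inputs(average, [[1.0, 2.0], [3.0]]))

# Run 2: adding an empty list exposes the division by zero.
print(failing_inputs(average, [[1.0, 2.0], []]))
```

The first run reports no failures even though the defect is present. This is why dynamic analysis is usually paired with broad test inputs and with static checks that do not rely on a particular execution.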

Tips and best practices for code reviews with analysis tools

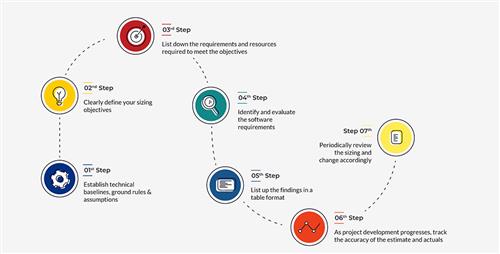

Analysis tools can help you conduct more efficient and effective code reviews, but they are not a substitute for human judgment or communication. To make the most of your analysis tools and code reviews, consider your project goals, requirements, constraints, and preferences when selecting an analysis tool. Configure and customize your tool settings and parameters to match your project specifications. Review and verify the issues reported by your tool, then prioritize and categorize them based on their severity, confidence, and impact. Communicate and collaborate with your team by sharing and discussing the tool results on a common platform. Provide clear feedback or suggestions for improvement in a respectful and constructive manner.
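
Prioritizing by severity and confidence can be as simple as a filter followed by a sort. The sketch below assumes a three-level severity scale and a confidence score between 0 and 1; the `Finding` class, the `SEVERITY_RANK` table, the threshold, and the rule names are all hypothetical, and real tools export their own formats.

```python
from dataclasses import dataclass

# Assumed severity scale; a lower rank is reviewed first.
SEVERITY_RANK = {"critical": 0, "major": 1, "minor": 2}


@dataclass
class Finding:
    rule: str
    severity: str      # one of the keys in SEVERITY_RANK
    confidence: float  # assumed to range from 0.0 to 1.0


def triage(findings: list[Finding], min_confidence: float = 0.5) -> list[Finding]:
    """Drop low-confidence findings, then order by severity and confidence."""
    kept = [f for f in findings if f.confidence >= min_confidence]
    return sorted(kept, key=lambda f: (SEVERITY_RANK[f.severity], -f.confidence))


report = [
    Finding("unused-variable", "minor", 0.9),
    Finding("sql-injection", "critical", 0.7),
    Finding("null-dereference", "major", 0.3),
    Finding("hardcoded-secret", "critical", 0.95),
]
for finding in triage(report):
    print(finding.severity, finding.rule, finding.confidence)
```

Findings below the threshold are not discarded for good in a real workflow. They are typically set aside for a later manual check, since a low-confidence report can still point at a real defect.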
